Authenticating Access to Private Content Hosted with AWS CloudFront

Setting up a static website by uploading it into an S3 bucket that serves as the origin of a CloudFront distribution is a simple and cheap solution. But what if you want to control access to the content? HTTP basic authentication no longer seems to work and is clumsy and ugly anyway. Signed cookies are a better solution, and they are easy to implement with serverless computing.

Available Options for Authentication with CloudFront

HTTP Basic Authentication

You know these unstyled dialog boxes popping up on some websites that ask you for a username and password? They are likely using HTTP basic authentication. It is a reasonably simple approach to require authentication for a website, and several recipes can be found on the internet describing how you can set up basic authentication with AWS Lambda, or more precisely Lambda@Edge, functions.

I have created such a site with the Lambda environment Node 14.x some time ago and it is still up and running. But when I tried to do the same again with a new site using Node 20.x, it no longer worked. The problem is that the approach requires the Lambda function to read out the Authorization header of the request, and AWS seems to no longer expose this header to Lambda (actually Lambda@Edge) functions.

About Signed Cookies and Signed URLs

Amazon's recommendation for controlling access to CloudFront distributions is to restrict viewer access. With that in place, CloudFront will check that either a special set of URL query parameters are present or that the browser sends a set of cookies, one of them containing an encrypted signature of the access policy. If the signature can be verified, access is granted. Otherwise a 403 is sent.

Signing URLs is quite easy but is only feasible if you want to control access to single files. If you want to grant access to the entire website or parts thereof, you would rather use signed cookies.

It is also not too hard to generate a signed cookie, but how do you import it into a browser? This is surely possible but not exactly user-friendly. Therefore some way of setting these cookies programmatically has to be found.

While researching the topic, I stumbled over the two-part blog post Signed cookie-based authentication with Amazon CloudFront and AWS Lambda@Edge that outlined a technique that worked even without using credentials at all.

The building blocks of the solution were:

- Viewer access is restricted by default for the whole site.

- Only the relative URLs /login.html, /login, and /assets are publicly accessible.

- The page /login.html is the error page for all 403 errors.

- The form action of /login.html is the endpoint /login?email=EMAIL.

- The endpoint /login triggers a Lambda@Edge function that checks whether the supplied email address is acceptable.

- If the check succeeds, a signed URL to the endpoint /auth is generated and sent to the email address given.

- The user clicks the link in the email and another Lambda@Edge function triggered by the /auth endpoint sets a set of signed cookies.

- The user is redirected to the start page / and now has access to the entire site, authenticated by these cookies.

You can, of course, try to follow the instructions from the blog post by the two Amazon engineers linked above. I found that they are somewhat outdated and wanted to change some details. For example, they only check the domain part of the email addresses because they had a company with one single domain in mind but I want to whitelist individual email addresses.

Security

Did you notice that no credentials are involved in the protocol? Can that be safe? In fact it is just as secure as the conventional "forgotten password" flow: You give your email address and click, a mail with a signed password reset link is sent, and whoever has access to your mailbox can follow the link and reset the password.

The security of that flow is based on the assumption that people protect their mailbox. Another aspect is that the strength of the password is totally irrelevant because the "forgotten password" flow allows an attacker to change it to whatever they like. But why should users then create a password in the first place?

However, it should not go unmentioned that the vast majority of emails are sent unencrypted over the internet. Even if two MTAs (mail transfer agents, aka mail servers) communicate directly, the IP traffic still passes through various hops, and many people are able to sniff that traffic and intercept signed URLs. But that is as much a problem for every site that has a password recovery by email function as it is for the authentication flow described here.

If you feel that your security demands are higher, feel free to not use the email trick described here but store usernames and password digests in AWS Secrets Manager or the Systems Manager Parameter Store instead. It will actually become easier. But in order to improve security, I would rather think about two-factor authentication.

Prerequisites

AWS Account

You need, of course, an AWS account. You can actually use AWS for free for up to 12 months, see the AWS free tier. Even if you have used up your free tier, experiments like the one described here will hardly cost more than a couple of cents.

Static Website

Then you will need a static web site. A popular option for managing static web sites is a static site generator, and I recommend Qgoda because I am the author. ;)

For the sake of this step-by-step guide, however, we will create a bunch of files by hand. We want to have the following structure:

/
+- 404-not-found.html
+- assets/
|  +- styles.css
+- index.html
+- login.html
+- login-status.html
+- other/
   +- index.html

We make the assumption that the site has a top-level directory /assets that just contains JavaScript, CSS, fonts, and other assets that are not worth protecting. We want to make sure that even unauthenticated users are able to request this content, so that for example the login page can still be styled.

Create an empty directory and inside of it, create the home page of the site index.html:

<link rel="stylesheet" href="/assets/styles.css" />
<h1>You are in!</h1>
<p>You can also visit <a href="/other/index.html">the other page</a>.

Yes, that is really ugly HTML code but your browser is used to hardship and will accept it without grumbling. But feel free to write proper HTML instead.

Next, we create the stylesheet assets/styles.css:

body {
  font-family: sans-serif;
  background-color: lightblue;
}

We just want to see that the stylesheet is loaded by changing the background colour to light blue and the font to one without serifs.

Now write the login page login.html:

<link rel="stylesheet" href="/assets/styles.css" />
<form action="/login">
  <label for="email">Email</label>
  <input type="text" id="email" name="email"
    placeholder="Enter your email address">
  <input type="submit" value="Log in">
</form>

The action attribute of the form points to /login, the endpoint that processes the form data.

We also want a status page login-status.html that is shown after a login attempt:

<link rel="stylesheet" href="/assets/styles.css" />
<p>
  If you have entered a valid email address and you are an authorized user of
  the site, you will receive an email with a link that grants you access for
  the next 30 days!
</p>

Since we want to "surf" the site in the end, we need at least one more page other/index.html:

<link rel="stylesheet" href="/assets/styles.css" />
<h1>Thanks for Visiting the Other Page!</h1>
<p>You can also visit the <a href="/">home page</a>.

And then there are broken links. So create one more page 404-not-found.html:

<link rel="stylesheet" href="/assets/styles.css" />
<h1>404 Not Found</h1>
<p>Go to <a href="/">home page</a>!.

S3 Bucket

Amazon S3 is a cloud object storage. With S3, you are actually just a mouse-click away from a static website by enabling the static website hosting feature. But that will not allow us to have authentication.

Anyway, S3 will serve as the "origin" for our site so we have to create a new storage, called "bucket" in S3 lingo.

- Log into the S3 console.

- Click Create Bucket. You can select whatever region you want. The name has to be globally unique, so "test" will probably not work but "www.example.com" will, if you replace "example.com" with a domain that you own. Otherwise just invent a name.

- Leave all other settings as they are and save by clicking Create bucket at the bottom.

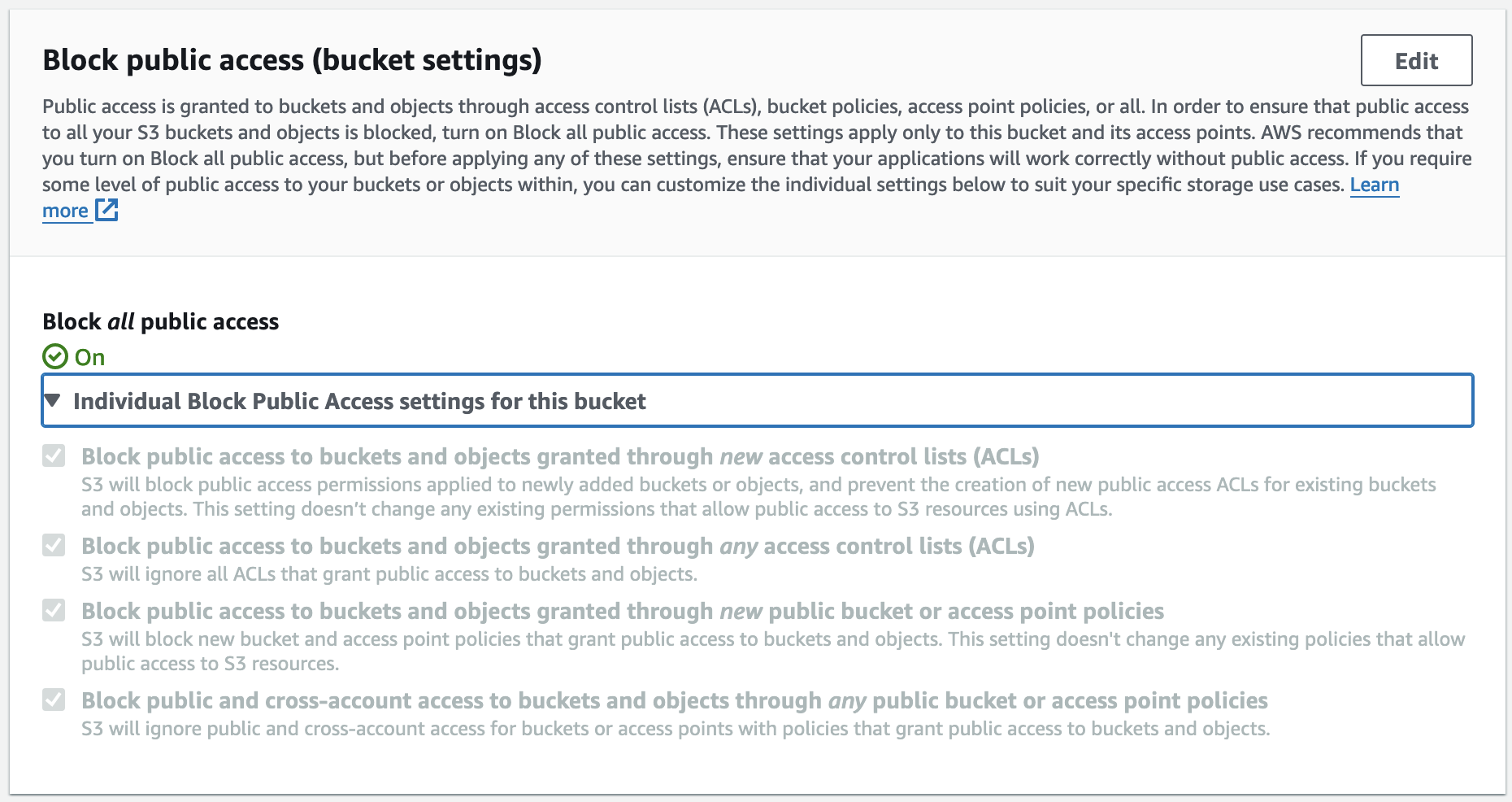

It is important that you block all public access to the bucket. The relevant section should look like this:

You will now see an overview of all of your buckets, otherwise choose Buckets in the menu at the left of the page. A click on the name of your new bucket will bring you to its object listing. Because your bucket is still empty, click on Upload, and either drag and drop the files and directories that you have created before (index.html, login.html, ...) into the upload area, or click Add files, and select them for upload.

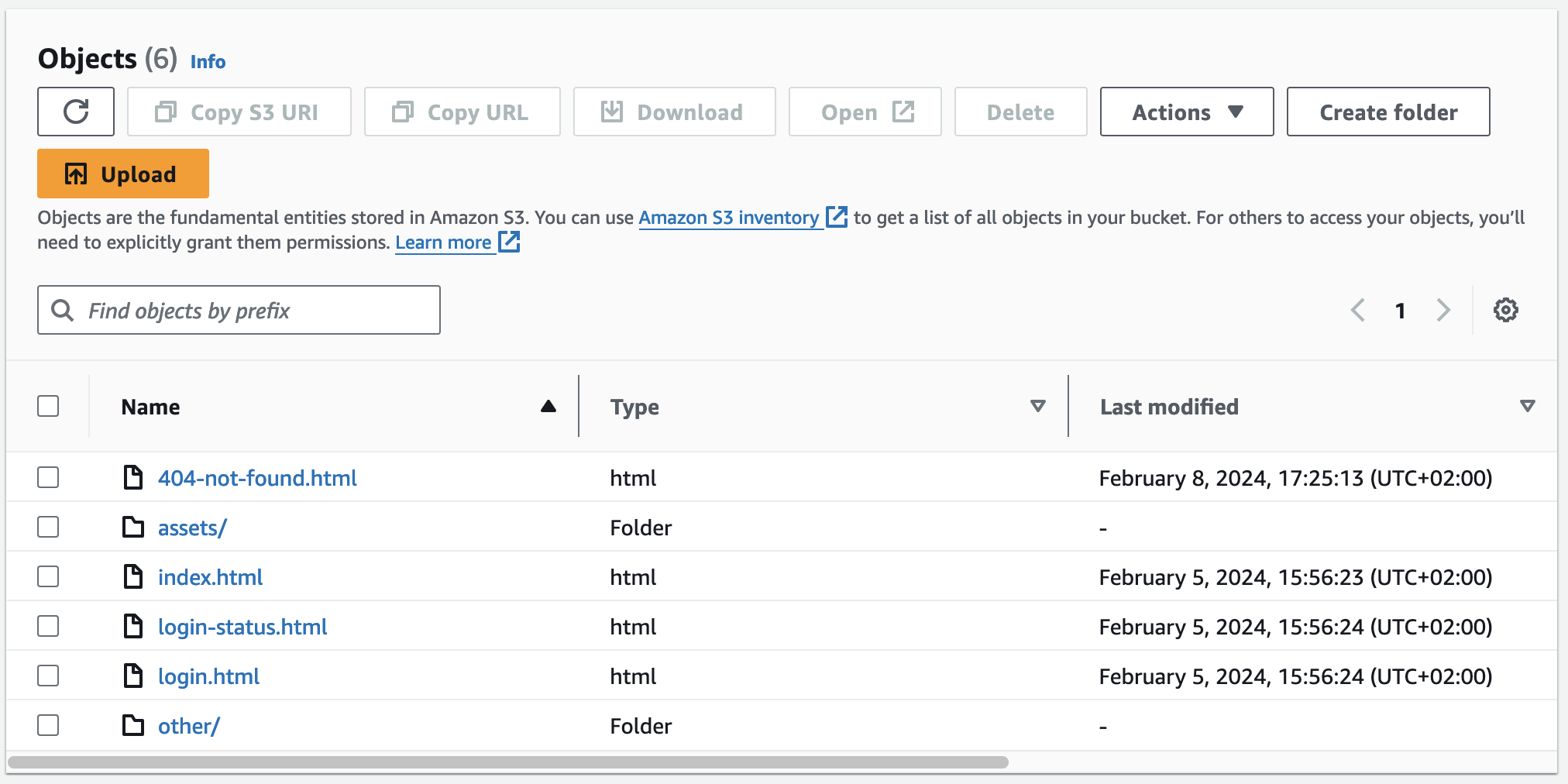

When the upload is done, go back to the bucket overview that should now look like this:

CloudFront Distribution

Amazon CloudFront is Amazon's content delivery network. You provide the actual website content (called the origin) either in an S3 bucket or any other website, and CloudFront makes it available on servers that are distributed all over the world so that a server that is close to the visitor can be selected. These servers are called "edges" or "edge servers" in CloudFront lingo.

One such content collection is called a distribution and has an address of the form https://abcde123.cloudfront.net where the hostname part abcde123 is a uniquely assigned hostname in the cloudfront.net domain. You can optionally use your own domain name as an alias, see below.

We will now create a distribution:

- Log into the CloudFront console.

- Click on Create Distribution to create a new distribution.

- In Origin | Origin domain, select the S3 bucket that you have created above.

- Leave the Origin | Origin path empty (or know what you are doing).

- Scroll down to Default Cache Behaviour | Viewer | Viewer protocol policy and select Redirect HTTP to HTTPS (or choose what you prefer).

- Do not yet enable Default Cache Behaviour | Viewer | Restrict Viewer Access.

- Under Default Cache Behaviour | Cache Key and origin requests leave Cache policy and origin request policy (recommended) but for the Cache policy select the CachingDisabled policy!

- Under Web Application Firewall (WAF) choose what you like; the attack surface of a static site is pretty small and may not need that extra protection.

- Leave everything else unchanged and click on Create Distribution to finish the process.

You should now be redirected to the distributions overview and see your new distribution. Its status should be "Enabled" and there is no last modification date but a message saying "Deploying ...". The meta information of the distribution is now distributed to the various edges. After a couple of minutes the string "Deploying ..." should be replaced with the date and time of the last modification.

Why not restrict the viewer access? We first want to try out whether the setup actually works and, besides, it is maybe not the smartest idea to already upload sensitive content while you are still fiddling around with the authentication.

And why disable caching? Don't worry! This will also be changed later. The cache is very strict, and it even caches errors (which is the desired behaviour). But it is almost inevitable that you will make mistakes in the setup and you can make your life easier by not caching anything for the time being because then you can simply fix the errors and try again.

Time for a quick test. Click on the name of your distribution, then the copy icon next to the hostname under Details | Distribution domain name and paste the address into the address bar of your browser. You should now see the HTML page index.html from the S3 bucket, and you should also be able to follow the link inside that page.

If something does not work as expected, please have a look at Amazon's documentation page Use an Amazon CloudFront distribution to serve a static website which gives up-to-date instructions for our use case.

Optional: Setting Up an Alternate Hostname

You may not be happy with an Amazon-assigned address like https://abcde12345.cloudfront.net/. You can register a domain, get a wildcard certificate for it for free, and configure it as an alternate hostname for your CloudFront distribution. This is described in great detail in the document Use an Amazon CloudFront distribution to serve a static website.

In the following, we will assume that you have done so, and refer to our static site with the name https://www.example.com/. Replace that with the Amazon assigned address if you decide to not use your own domain name.

About Signed URLs and Signed Cookies

When you restrict viewer access to a CloudFront distribution, visitors must either use signed URLs or signed cookies. If no signature is provided or the signature cannot be verified, access is denied.

So how does signing a request and verifying the signature work in general? Signed URLs are easier to understand. So let's look at them first.

Amazon uses "policies" to control access. A typical policy for CloudFront viewer access looks like this:

{
  "Statement": [
    {
      "Resource": "https://www.example.com/search?q=fun",
      "Condition": {
        "DateLessThan": {
          "AWS:EpochTime": 1706860856
        }
      }
    }
  ]
}

That basically translates to: You are allowed to visit https://www.example.com/search?q=fun until 1706860856 seconds after Jan 1st, 1970 00:00:00 GMT.

For creating a signature, this policy is first normalized by removing all whitespace (spaces, tabs, newlines) so that it consists of just a single line without any whitespace. This is still valid JSON.
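
The normalization can be sketched in a few lines of Node.js. The helper name cannedPolicy is made up for this illustration. The string is assembled by hand so that Resource always comes before Condition, as in the example above. The snippet uses require so that it runs as a standalone script.

```javascript
// Hypothetical helper: builds a canned policy as a single line without
// whitespace, with "Resource" before "Condition" as in the example above.
function cannedPolicy(resource, expiresEpochSeconds) {
  return (
    '{"Statement":[{"Resource":' + JSON.stringify(resource) +
    ',"Condition":{"DateLessThan":{"AWS:EpochTime":' +
    expiresEpochSeconds + '}}}]}'
  );
}

const policy = cannedPolicy('https://www.example.com/search?q=fun', 1706860856);
console.log(policy);
```

The result is one line of JSON that parses back into the same structure as the pretty-printed policy.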

You may have noticed that there are actually two equivalent versions of this piece of JSON. The keys Resource and Condition could be swapped because the keys of an object in JSON are not ordered. If you need JSON to be deterministic, the convention of "canonical" JSON exists, where all keys of an object appear in alphanumerical order. That slows down encoding JSON but is a straightforward method to make JSON deterministic and round-trip-safe.

It will remain the secret of the AWS developers why Amazon decided not to follow that convention and instead relies on an order that is not alphanumeric. That not only slows down the encoding of the JSON policy, it also means that you cannot rely on a JSON-generating library unless it preserves the order in which you insert the keys. Anyway, we have to live with that flaw.

Now that the policy is "normalized", it is hashed into a SHA-1 digest, which is then encrypted with your private key, that is, signed asymmetrically. The signature is binary and therefore gets base64-encoded. But since the characters +, /, and = have special meanings in URLs, they are replaced by -, ~, and _, respectively, resulting in a URL-safe variant of Base64.
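
As a rough sketch, hashing, signing, and encoding could look like this with the crypto module that ships with Node.js. The helper names toCloudFrontBase64 and signPolicy are invented for this example, and a throwaway key pair is generated only so that the snippet runs on its own.

```javascript
const crypto = require('node:crypto');

// Throwaway key pair so that the sketch runs standalone; in practice you
// would load the private key that belongs to your CloudFront public key.
const { privateKey, publicKey } = crypto.generateKeyPairSync('rsa', {
  modulusLength: 2048,
});

// Replace the characters that are special in URLs: + -> -, / -> ~, = -> _
function toCloudFrontBase64(buffer) {
  return buffer
    .toString('base64')
    .replace(/\+/g, '-')
    .replace(/\//g, '~')
    .replace(/=/g, '_');
}

// Hash the normalized policy with SHA-1, sign the digest with the RSA
// private key, and encode the binary signature.
function signPolicy(policy, key) {
  const signer = crypto.createSign('RSA-SHA1');
  signer.update(policy);
  return toCloudFrontBase64(signer.sign(key));
}

const examplePolicy =
  '{"Statement":[{"Resource":"https://www.example.com/search?q=fun",' +
  '"Condition":{"DateLessThan":{"AWS:EpochTime":1706860856}}}]}';
const signature = signPolicy(examplePolicy, privateKey);
console.log(signature);
```

The printed value is what ends up in the Signature parameter of a signed URL.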

By the way, this policy that restricts access to exactly one URL to an exact point of time is called a canned policy by Amazon and it is crucial that it is encoded in exactly the way as described above, including the order of the keys.

The browser now sends a request where all information contained in the encrypted policy is also available in cleartext, something like this (distributed over several lines for improved readability):

https://www.example.com/search?q=fun\
&Expires=1706860856\
&Key-Pair-Id=ABCDEFG0123456\
&Signature=SIGNATURE-AS-URL-SAFE-BASE64

Scroll up to see that there are actually just two pieces of information contained in the policy: the resource, which is the base URL, and the expiry date as seconds since the epoch. Both of them are also visible in the signed URL. The base URL is the origin, path, and query string of the URL without the three parameters Expires, Key-Pair-Id, and Signature that have been added by the signing procedure.

The expiry date is simply added as the query parameter Expires.

CloudFront is therefore capable of recreating the policy, and it can then verify the signature. Okay, wait, the digest of the policy had been encrypted with a private key, and Amazon therefore needs the corresponding public key to verify the signature. You have to upload the public key to AWS CloudFront first, and Amazon is able to find it by the Key-Pair-Id that you have transferred as a URL parameter.
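
Conceptually, the check on the CloudFront side could be sketched as follows. This is only an illustration of the idea, not Amazon's actual implementation. The function verifySignedUrl is hypothetical, and it assumes that the three parameters are appended in the order shown in the example URL above.

```javascript
const crypto = require('node:crypto');

// Undo the URL-safe replacements (- -> +, ~ -> /, _ -> =) and decode.
function fromCloudFrontBase64(text) {
  return Buffer.from(
    text.replace(/-/g, '+').replace(/~/g, '/').replace(/_/g, '='),
    'base64',
  );
}

// Rebuild the canned policy from the cleartext parts of the signed URL and
// check the signature against it with the public key found by its id.
function verifySignedUrl(signedUrl, publicKeys, nowEpochSeconds) {
  const match = signedUrl.match(
    /^(.*)[?&]Expires=(\d+)&Key-Pair-Id=([^&]+)&Signature=([^&]+)$/,
  );
  if (!match) return false;
  const [, baseUrl, expires, keyPairId, signature] = match;
  const publicKey = publicKeys.get(keyPairId);
  if (!publicKey || nowEpochSeconds >= Number(expires)) return false;

  const policy =
    '{"Statement":[{"Resource":' + JSON.stringify(baseUrl) +
    ',"Condition":{"DateLessThan":{"AWS:EpochTime":' + expires + '}}}]}';
  return crypto
    .createVerify('RSA-SHA1')
    .update(policy)
    .verify(publicKey, fromCloudFrontBase64(signature));
}

// Demo with a throwaway key pair and the values from the example above.
const { privateKey, publicKey } = crypto.generateKeyPairSync('rsa', {
  modulusLength: 2048,
});
const demoBase = 'https://www.example.com/search?q=fun';
const demoExpires = 1706860856;
const demoPolicy =
  '{"Statement":[{"Resource":"' + demoBase +
  '","Condition":{"DateLessThan":{"AWS:EpochTime":' + demoExpires + '}}}]}';
const demoSignature = crypto
  .createSign('RSA-SHA1')
  .update(demoPolicy)
  .sign(privateKey, 'base64')
  .replace(/\+/g, '-').replace(/\//g, '~').replace(/=/g, '_');
const signedUrl =
  `${demoBase}&Expires=${demoExpires}` +
  `&Key-Pair-Id=ABCDEFG0123456&Signature=${demoSignature}`;
const keys = new Map([['ABCDEFG0123456', publicKey]]);

console.log(verifySignedUrl(signedUrl, keys, demoExpires - 60));
```

Changing anything in the base URL or the Expires parameter makes the recreated policy differ from the signed one, so the verification fails.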

Why key pair? In asymmetric cryptography, keys always come in pairs of a private key and a public key that is derived from the private key. The public key is sufficient for verifying the signature and therefore, you normally do not upload the private key to AWS. For our use case we do have to but that is a different story, see below.

Anyway, the Key-Pair-Id is rather a Public-Key-Id but Amazon chose to call it differently.

In a nutshell, the protocol now goes like this:

- You create a key pair and upload the public key to CloudFront where it is assigned a key pair id.

- You sign a URL with your private key to allow access to the URL to some point in time in the future.

- You share that URL with whoever you want.

- That "whoever" sends a request to that base URL claiming that they have the rights to access that URL until a certain point in time in the future.

- Amazon only grants access when the signature that is part of the signed URL matches the information given in cleartext.

Signed cookies work in exactly the same way, only that the information provided is contained in cookies and not as URL parameters.

Another difference between the processes for signed URLs and signed cookies is that signed cookies normally use custom policies as opposed to canned policies with a fixed structure as used above. Custom policies are totally flexible and can contain arbitrary rules. The signature and the public key id sent along with the encoded policy ensure that it has not been tampered with.
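
A minimal sketch of how the values of the three cookies CloudFront-Policy, CloudFront-Signature, and CloudFront-Key-Pair-Id could be computed for a custom policy that covers the whole site. The helper names are made up, the key pair id is the example id used elsewhere in this post, and the key pair is generated on the fly only to keep the snippet self-contained.

```javascript
const crypto = require('node:crypto');

const toCloudFrontBase64 = (buffer) =>
  buffer
    .toString('base64')
    .replace(/\+/g, '-')
    .replace(/\//g, '~')
    .replace(/=/g, '_');

// Custom policy: a wildcard resource grants access to the whole site.
function customPolicy(resource, expiresEpochSeconds) {
  return JSON.stringify({
    Statement: [
      {
        Resource: resource,
        Condition: { DateLessThan: { 'AWS:EpochTime': expiresEpochSeconds } },
      },
    ],
  });
}

// The policy travels in encoded form next to its signature and the key id.
function signedCookies(policy, keyPairId, privateKey) {
  const signature = crypto
    .createSign('RSA-SHA1')
    .update(policy)
    .sign(privateKey);
  return {
    'CloudFront-Policy': toCloudFrontBase64(Buffer.from(policy, 'utf8')),
    'CloudFront-Signature': toCloudFrontBase64(signature),
    'CloudFront-Key-Pair-Id': keyPairId,
  };
}

// Demo: access to everything under www.example.com for 30 days.
const { privateKey, publicKey } = crypto.generateKeyPairSync('rsa', {
  modulusLength: 2048,
});
const thirtyDays = 30 * 24 * 60 * 60;
const sitePolicy = customPolicy(
  'https://www.example.com/*',
  Math.floor(Date.now() / 1000) + thirtyDays,
);
const cookies = signedCookies(sitePolicy, 'K88DNWP2VR6KE', privateKey);
console.log(cookies);
```

Unlike with a canned policy, the policy itself is part of what the browser sends, which is why it can contain a wildcard resource.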

Setting Up Viewer Restrictions

Next we will see how to set the site up for using signed URLs and signed cookies.

Creating a Key Pair

Although you could use ssh-keygen, I will try to stick with openssl for creating everything you need for setting up restricted viewer access for CloudFront.

Amazon is pretty strict about the details of the cryptographic keys to use, see their documentation:

- It must be an SSH-2 RSA key pair.

- It must be in base64-encoded PEM format.

- It must be a 2048-bit key pair.

And this is how you create and store it in a file private_key.pem:

$ openssl genrsa -out private_key.pem 2048

Now extract the public key from the private key like this:

$ openssl rsa -pubout -in private_key.pem -out public_key.pem

With the output file public_key.pem, Amazon is now able to verify that you have created a particular signature with the private key generated above, although Amazon normally does not have access to that private key.
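
If you prefer to stay within Node.js, roughly the same two steps can be done with the built-in crypto module. This is just an alternative sketch, and the variable names are arbitrary. Note that the PEM encoding of the private key may differ from what your openssl version writes.

```javascript
const crypto = require('node:crypto');

// Counterpart of "openssl genrsa": create a 2048-bit RSA private key.
const { privateKey } = crypto.generateKeyPairSync('rsa', {
  modulusLength: 2048,
});
const privatePem = privateKey.export({ type: 'pkcs8', format: 'pem' });

// Counterpart of "openssl rsa -pubout": the public key is derived from the
// private key alone, no other input is needed.
const publicPem = crypto
  .createPublicKey(privatePem)
  .export({ type: 'spki', format: 'pem' });

console.log(publicPem);
```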

Uploading the Public Key

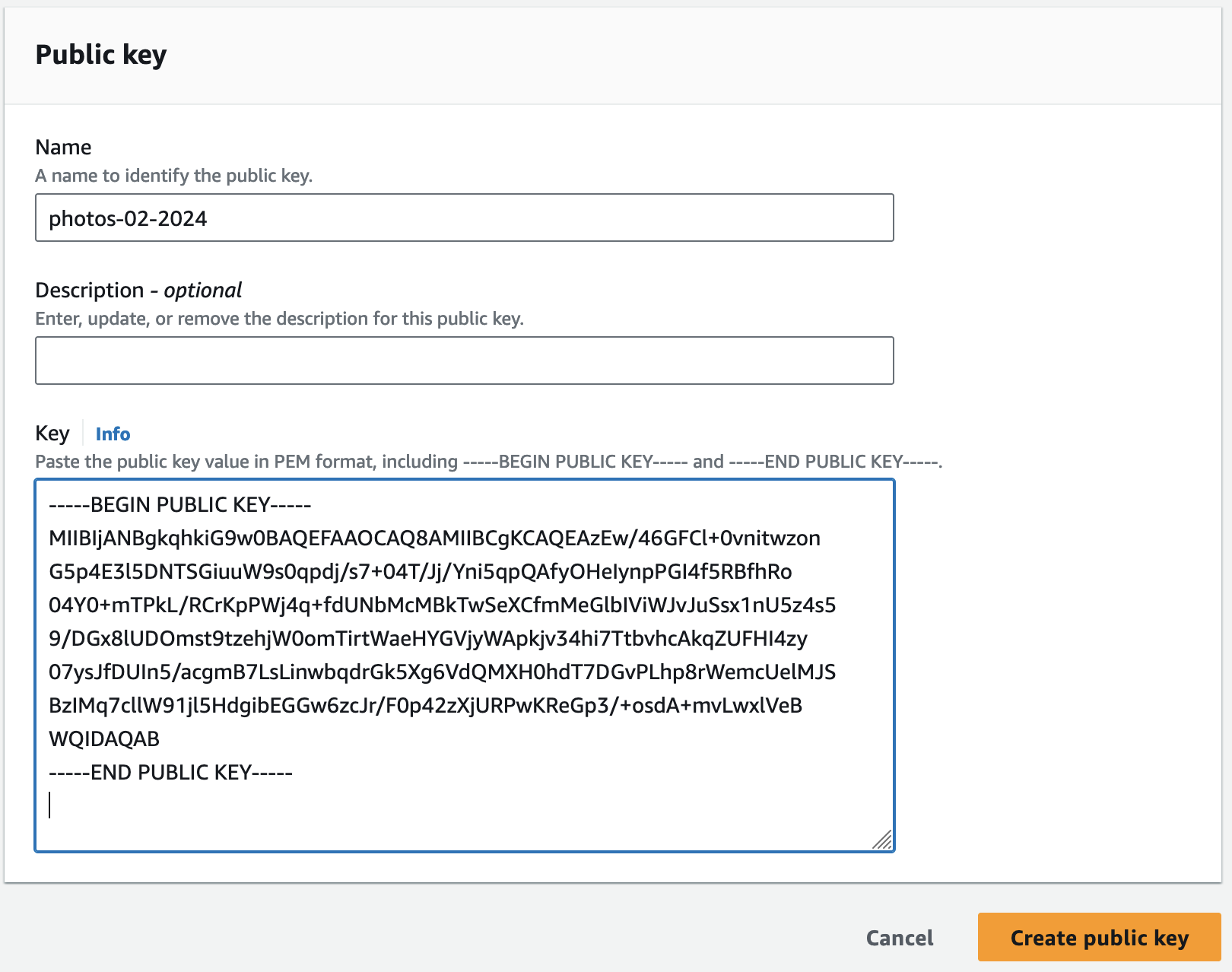

It is now time to let Amazon know the public key so that it can verify the signatures that we will subsequently produce. Log into the Amazon CloudFront console, go to Key Management | Public Keys, and click the orange button Create public key. Well, we already know that you cannot create a public key but only extract it from a private key, but who cares?

In the form that shows up you are being asked for a name and the actual key. For the name, I suggest something that includes the current date, for example photos-02-2024. Why? Because it is a good idea to change your keys from time to time.

Your public key is currently saved in a file public_key.pem. Open it in an editor of your choice, and then copy and paste it into the Key field of the form.

Save by clicking Create public key. In the overview of public keys that is shown next, you will see that AWS has assigned it an id like K88DNWP2VR6KE that is unique to your CloudFront distribution.

Creating a Key Group

What is a Key Group?

As mentioned above, it may be a good idea to change keys from time to time, in order to prevent the keys from being compromised. AWS embraces that pattern with the concept of key groups. When you protect content in CloudFront you do not specify a particular key but a key group. Every now and then you should create a new key pair and upload the public key into the key group as described above. You will always sign URLs and cookies with the latest key that you have created, but every key from the key group is sufficient to verify a signed URL or cookie. Once the transition is done, you can phase out the old key and only use the new one.

That being said, you can only specify a trusted key group, and not a particular trusted public key id (aka key-pair id) for protecting a CloudFront distribution, and this is done as follows.

Log into the Amazon CloudFront console, go to Key management | Key groups, select Create key group, give your key group a name, and select the public keys that should be used for verifying signatures. My advice is to choose a generic name without a date like photos as this will be a long-term container of trusted keys for this collection of content. For the time being, you will only add the single key that we have just created.

Restricting Viewer Access

Now that we have a key, we can restrict access to our content. Go to the CloudFront console and select Distributions, the top-level menu entry. Click on the distribution that you want to access and go to the behaviours tab.

At the moment there is just one behaviour Default (*). Select it, and click Edit. Go to Viewer | Restrict viewer access and change to yes. For the trusted authorization type, select trusted key groups, and add the key group that you have created in the last step. Save your changes and wait a couple of minutes until the status of the distribution changes from Deploying ... to the last modification date.

Open the distribution in the browser, and now you should get a 403 with an XML error message, showing you that access is denied now.

Behaviours

A behaviour for CloudFront is a set of rules for a certain part of your site. Its equivalent for nginx or Apache would be a location section. We will now create a couple of such behaviours. Let us recap our authentication flow so that we can find out which behaviours we need:

The starting point is /login.html where people enter their email address. This URL must be publicly available and so we create a second behaviour for /login*.html. We use a wildcard, so that we can also use a public confirmation page /login-status.html.

The action attribute of the login form points to /login and we create a third behaviour for it, public again. This behaviour is not associated with any content but will only trigger a Lambda function. That Lambda function checks the entered email address, and sends a signed confirmation URL to the user's inbox.

The confirmation URL points to /auth and must be restricted. It is also not associated with any content but will just trigger a second Lambda function that will set three signed cookies and redirect to the start page /. The user can then surf the site without restrictions.

We can also add a /logout behaviour, that is associated with a Lambda that unsets the cookie again and redirects to the login page.

All pages of the site may contain CSS, JavaScript, fonts and so on that should all be located under /assets/*. This area should also be publicly available but you must make sure that it does not contain sensitive information.

All in all we need five behaviours in addition to the default behaviour for *.
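
To get a feeling for how requests are mapped to these behaviours, here is a simplified model in JavaScript. It assumes that * matches any number of characters, that ? matches exactly one character, and that the first matching pattern in the list wins, with the default behaviour * last. The function names are invented, and the real matching is of course done by CloudFront itself.

```javascript
// Path patterns in the order in which they should be evaluated.
const patterns = [
  '/login*.html',
  '/login',
  '/auth',
  '/logout',
  '/assets/*',
  '*', // default behaviour
];

// Translate a path pattern into a regular expression.
function patternToRegExp(pattern) {
  const escaped = pattern.replace(/[.+^${}()|[\]\\]/g, '\\$&');
  return new RegExp(
    '^' + escaped.replace(/\*/g, '.*').replace(/\?/g, '.') + '$',
  );
}

// Return the first pattern that matches the request path.
function matchBehaviour(path) {
  return patterns.find((pattern) => patternToRegExp(pattern).test(path));
}

for (const path of ['/login.html', '/login', '/assets/styles.css', '/index.html']) {
  console.log(path, '->', matchBehaviour(path));
}
```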

Default Behaviour /*

Before we start creating new behaviours, we should have another look at the default (fallback) behaviour. In the beginning we have said that you should check Redirect HTTP to HTTPS for the viewer protocol policy. This is mostly for the visitors' convenience so that they can enter the URL without a protocol. Likewise, when URLs without a protocol, like www.example.com, are automatically converted into links by web authoring software, they are almost always converted into HTTP links, not HTTPS links.

But that has a security glitch. Imagine we automatically upgraded requests to /login from HTTP to HTTPS. Somebody could then create a form with a form action of http://www.example.com/login. The login credentials would be sent in cleartext before the redirect happens, and that is deemed insecure.

What is the recommended setting? Clearly "HTTPS only", especially when you have not configured an alternative domain. Would somebody really enter something like k4k20dk0pmn3jh.cloudfront.net into the browser's address bar? No, these types of URLs will be shared via other channels, and people just click them.

Still, it is sufficiently secure to upgrade requests for the public areas of your website except for all URLs that are involved in the authentication flow. Everything else should be HTTPS only.

And while you are at it, you should also enable HTTP Strict Transport Security, and fortunately, CloudFront has a canned policy for this protocol. Select the default behaviour *, scroll down to Cache key and origin requests | Response headers policy, select SecurityHeadersPolicy and save your changes. This setting will add the Strict-Transport-Security header with a maximum age of one year plus a bunch of other security-improving headers to all responses.

Behaviour for /assets/*

Next create a new behaviour for /assets/* or wherever you host non-sensitive resources like CSS, JavaScript, fonts and so on. You must add your S3 bucket as the origin group, select HTTPS only for the viewer protocol policy, leave the setting for Restrict viewer access as "No", and select the SecurityHeadersPolicy. This policy should be selected for all of your behaviours.

If you have more locations for publicly available resources, you have to add a behaviour for all these locations.

Behaviour for /login*.html

Follow the exact same steps as for /assets/* above.

Behaviour for /login

This is the URL that login requests are sent to. It should now be clear that you must select HTTPS only but do not restrict viewer access. Otherwise people will not be able to send their login credentials.

Behaviours for /auth and /logout

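Follow the same steps as for /login, with one exception: as explained above, /auth is only meant to be reached through a signed URL, so viewer access must stay restricted for it.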

If you try out the new behaviours, keep in mind that responses may come from the cache. When in doubt, create an invalidation for /* (or more specific URLs) and wait for it to complete.

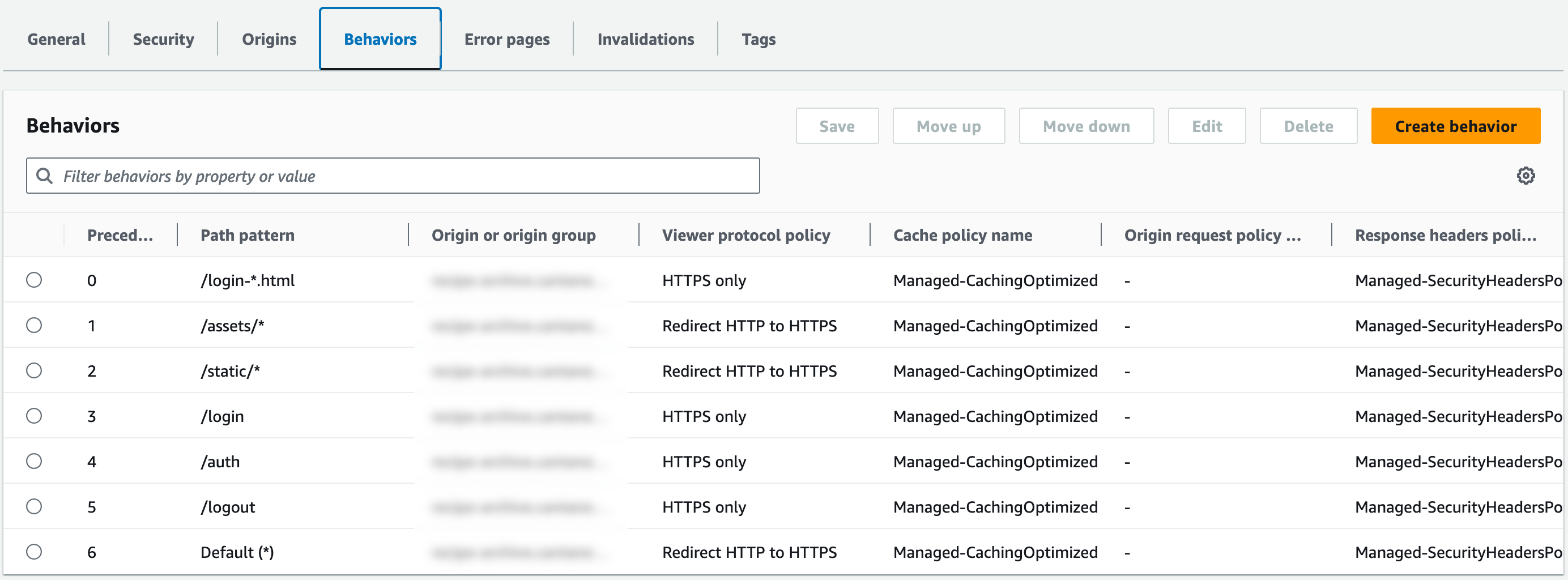

When you click the behaviour tab you should now see something like this:

The screenshot shows a second directory with publicly available assets /static/ that you probably do not need.

The Login Lambda Function

We can now start coding. We will use the latest Node.js runtime (20.x at the time of this writing) but you should be able to easily port the same functionality into every other supported language.

Code

Go to the AWS Lambda Console and click the orange Create function button. It is crucial that you create the function in the us-east-1 region (N. Virginia). The reason is that the function must run on the edge servers and there are certain restrictions for Lambda@Edge functions, one of them being that they must be created in the us-east-1 region.

Leave Author function from scratch selected, name it something like yourSiteLogin, choose the latest Node.js runtime, and hit Create function.

The next screen is divided into the Function overview at the top and some kind of crude IDE at the bottom. For now make sure that you select the Code tab. In the left pane you see the files belonging to your Lambda function, at the moment just one, index.mjs and the source code in the right pane.

Why index.mjs and not index.js? Nowadays, JavaScript Lambda functions are modules and use ES6 syntax. Old code examples that use CommonJS, like the code from the blog post of the two Amazon engineers mentioned above, no longer work inside an index.mjs handler.

You can copy and paste the following code overwriting the hello-world example.

import { SESv2Client, SendEmailCommand } from '@aws-sdk/client-sesv2';
import { SSMClient, GetParameterCommand } from '@aws-sdk/client-ssm';
import { getSignedUrl } from '@aws-sdk/cloudfront-signer';

const emailSender = 'you@example.com';
const hostname = 'www.example.com';
const prefix = 'photos';
const region = 'us-east-1';

const authUrl = `https://${hostname}/auth`;
const loginUrl = `https://${hostname}/login.html`;
const statusUrl = `https://${hostname}/login-status.html`;

const response = {
  status: '302',
  statusDescription: 'Found',
  headers: {
    location: [{ key: 'Location', value: statusUrl }],
    'cache-control': [{ key: 'Cache-Control', value: 'max-age=100' }],
  },
};

const cache = {}

const ses = new SESv2Client({ region });
const ssm = new SSMClient({ region });

export const handler = async (event, context) => {
  const request = event.Records[0].cf.request;
  const parameters = new URLSearchParams(request.querystring);

  if (!parameters.has('email') || parameters.get('email') === '') {
    return {
      status: '302',
      statusDescription: 'Found',
      headers: {
        location: [{ key: 'Location', value: loginUrl }],
        'cache-control': [{ key: 'Cache-Control', value: 'max-age=100' }],
      },
    };
  }

  if (typeof cache.registeredEmails === 'undefined') {
    const registeredEmails = await getParameter('registeredEmails', true);
    cache.registeredEmails = registeredEmails.split(/[ \t\n]*,[ \t\n]*/);
  }

  cache.keyPairId ??= await getParameter('keyPairId');
  cache.privateKey ??= await getParameter('privateKey', true);
  const email = parameters.get('email');
  if (cache.registeredEmails.includes(email.toLowerCase())) {
    await sendEmail(cache.keyPairId, cache.privateKey, email);
  }

  return response;
};

const getParameter = async (relname, WithDecryption = false) => {
  const Name = `/${prefix}/${relname}`
  const command = new GetParameterCommand({Name, WithDecryption});

  try {
    const response = await ssm.send(command);
    return response.Parameter.Value;
  } catch (error) {
    console.error(`Error retrieving parameter '${Name}': `, error);
    throw error;
  }
};

const sendEmail = async(publicKey, privateKey, email) => {
  const expires = new Date(new Date().getTime() + 60 * 10 * 1000);
  const signedUrl = getSignedUrl({
    url: authUrl,
    keyPairId: publicKey,
    dateLessThan: expires,
    privateKey: privateKey,
  });

  const command = new SendEmailCommand({
    Destination: {
      ToAddresses: [
        email
      ]
    },
    Content: {
      Simple: {
        Body: {
          Text: {
            Data: `Log in here: ${signedUrl}!`,
            Charset: 'UTF-8'
          }
        },
        Subject: {
          Data: 'Photo Archive Login Credentials for ' + email,
          Charset: 'UTF-8'
        }
      },
    },
    FromEmailAddress: emailSender,
  });

  try {
    await ses.send(command);
  } catch (error) {
    console.error(`Error sending email to '${email}': `, error);
    throw error;
  }
};

Lots of code, and I personally would implement it differently but I wanted to keep the implementation as short as possible. Feel free to improve it.

It should actually be enough to configure just four settings in order to adapt it to your needs:

- Line 5: Set an email address that should be the sender of the mail with the confirmation link.

- Line 6: Set the hostname of your site, either a CloudFront hostname or your alternative domain.

- Line 7: Choose a unique prefix for further configuration values.

- Line 8: Choose a region for the other AWS services that have to be used, for instance Amazon Systems Manager and the Simple Email Service (SES).

You can also change some URLs in line 10-12 but the defaults should be fine for you if you have followed the instructions given above.

For the rest of the code I will not explain every detail but just stick to the basics.

The actual handler is defined in line 28. It is invoked with two arguments, event and context. The structure of CloudFront request events, actually Lambda@Edge events, is documented here: https://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/lambda-event-structure.html. The second argument, the context, "provides methods and properties that provide information about the invocation, function, and execution environment", see https://docs.aws.amazon.com/lambda/latest/dg/nodejs-context.html. We do not need anything from the context object for our use case.

In line 32 we check that the user has given an email address. If not, we just redirect to the login page. As an improvement you could also add some consistency check here or strip whitespace off the input given.
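The consistency check and whitespace stripping mentioned above could look roughly like this. It is only a sketch: the helper name extractEmail and the deliberately loose regular expression are my assumptions and not part of the function shown above. Lowercasing only helps if the registered addresses are stored in lowercase as well.

```javascript
// Hypothetical helper, not part of the function above: extract the email
// from the raw query string of the CloudFront request and sanity-check it.
const extractEmail = (querystring) => {
  const raw = new URLSearchParams(querystring ?? '').get('email') ?? '';
  const email = raw.trim().toLowerCase();
  // Deliberately loose check: one "@", no whitespace, a dot in the domain.
  return /^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email) ? email : null;
};
```

A return value of null would then lead to the same redirect to the login page as a missing address.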

The function needs the list of registered email addresses, the key pair id and the private key, so that it can sign the URL. These parameters are obtained in lines 43-50 from Amazon Systems Manager and they are cached in a variable for the time of the Lambda execution lifecycle. For simple cases, you could also just hardcode these values but that is less flexible, and hardcoding a private key in source code is not exactly best practice. But your mileage may vary.
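The caching idea can be isolated in a few lines. The following is a sketch under assumptions: cached and load are hypothetical names, and load stands in for whatever actually fetches the value, for example the Systems Manager lookup. Unlike the function above, it stores the pending promise and forgets failed lookups so that they are retried.

```javascript
// Module scope survives between invocations for as long as the execution
// environment is reused, so each value is loaded at most once per environment.
const cache = new Map();

// `load` is a placeholder for the real lookup and must return a promise.
const cached = (name, load) => {
  if (!cache.has(name)) {
    const pending = load(name).catch((error) => {
      cache.delete(name); // do not cache failures, retry on the next call
      throw error;
    });
    cache.set(name, pending);
  }
  return cache.get(name);
};
```

Inside a handler you would then write something like `const keyPairId = await cached('keyPairId', getParameter);`.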

Finally, in line 51 we check whether the email address provided is one of the registered addresses that have been configured. The original concept by the two Amazon engineers chose a different approach and whitelisted entire domains instead of individual email addresses. That may make sense for organizations that have their own email domain but certainly not for ones that use addresses like @gmail.com.

If the address is valid, a signed URL is generated and sent via email to the address provided. You may ask why there is no else. That is your own decision. You could also redirect to some error page that states that the email address given is not registered. But why give that information? I personally prefer some neutral text like "If the email address provided is valid, you will receive an email soon" so that nobody can find out whether a certain email is valid or not.

Test the Function

The AWS Lambda Console allows for end-to-end testing but first you have to deploy the function. As soon as you make modifications to the code, you see a badge saying "Changes not deployed" and a button Deploy gets enabled. Click the button and wait until deployment of the code completes.

In order to test the function you also have to create test events. These events are objects provided as JSON that are used as the first argument to the handler function. We will start by defining an event that does not contain an email address or rather an empty email address. Click on the "Test" tab (not the button next to Deploy) which actually does not test but allows creating and editing test events.

Select Create new event, call it missingEmail, and overwrite the example JSON event with this one:

{

"Records": [

{

"cf": {

"request": {

"querystring": "email="

}

}

}

]

}

Save the event by clicking Save. The button is a little bit hidden, at the top.

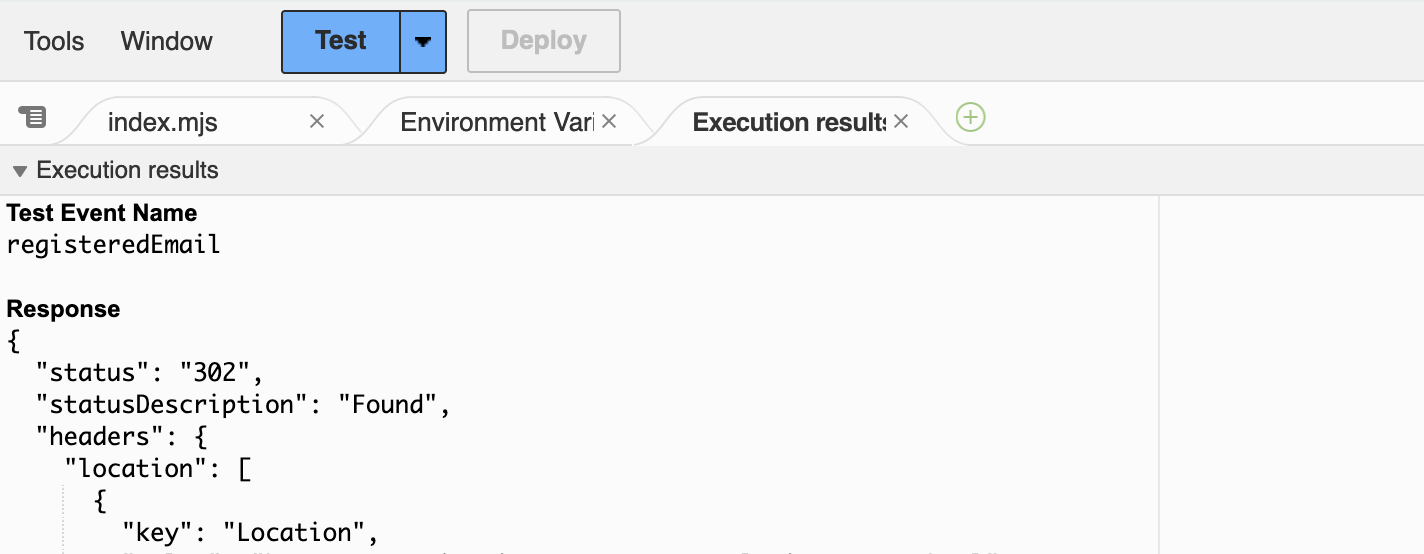

Switch back to the code tab, make sure that the code is deployed, and hit Test. The output is divided into four sections "Test Event Name", "Response", "Function Logs", and "Request ID". You should see the response that we have generated for that case, a redirect to the login URL. So far, so good.

By the way, if you wonder where your code has vanished look at the little tabs above the editor:

In order to edit the code, you have to click the leftmost tab index.mjs because running the test will switch the tab to the Execution result tab on the right.

Go back to the Test tab now and create a second event wrongEmail:

{

"Records": [

{

"cf": {

"request": {

"querystring": "email=eve@example.com"

}

}

}

]

}

Do not forget to save the event, go to the Code tab, click the dropdown right of the Test button, and select the new event "wrongEmail". Now hit Test. This time you get an AccessDeniedException with an error message like "User: arn:aws:sts::12345:assumed-role/photoLogin-role-7kt5upfa/photoLogin is not authorized to perform: ssm:GetParameter on resource: arn:aws:ssm:us-east-1:56789:* because no identity-based policy allows the ssm:GetParameter action". In plain English, the Lambda function is not allowed to get parameters from the AWS Systems Manager.

Create Systems Manager Parameters

When you created the Lambda function, AWS automatically created an execution role for it. This is the identity of the function during execution. We now have to allow this role to get parameters from the Systems Manager. The error message suggests that you should allow the role to get all parameters from the Systems Manager. I would not recommend that. Instead, restrict read access to the parameters that are actually needed.

Actually, you could also store the parameters in AWS Secrets Manager but that is more expensive and more complicated. Feel free to use it if your requirements differ.

For now, go to the AWS Systems Manager console, select Application Management | Parameter store and create 3 parameters.

The first one must be called /photos/keyPairId. The slashes allow you to create a hierarchy. Use Standard as the tier, type String, and enter the id of the public key you have created into the value input. You can find the id in your CloudFront distribution under Key management | Public keys.

Do not forget to save the value. If you prefer to use SecureString as the type, you have to change the code of the Lambda function and set the second parameter of the invocation of getParameter() to true.

Now create a second parameter /photos/privateKey, this time with type SecureString. Copy the contents of your private key file (not public_key.pem) into the value field and save.

The third parameter /photos/registeredEmails should contain a comma-separated list of email addresses that should have access to the site. Do not invent test addresses or use placeholder domains like @example.com because the test will really send an email, and you do not want to send signed URLs around on the internet. This variable should also be of type SecureString (otherwise change the code).

You may wonder why not use the type StringList:

- Because there is no SecureStringList.

- A string list is also just a string consisting of comma-separated values.

Anyway, make sure to add an email address that you have access to so that you can test the functionality.
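Since the parameter is just one comma-separated string, the function has to split it up before it can look up an address. A minimal sketch of that step, assuming that stray whitespace and letter case should not matter; parseRegisteredEmails is a hypothetical name and not necessarily how the login function does it:

```javascript
// Turn "alice@example.com, bob@example.com" into a set of normalized
// addresses. Empty entries (for instance from a trailing comma) are dropped.
const parseRegisteredEmails = (value) =>
  new Set(
    value
      .split(',')
      .map((entry) => entry.trim().toLowerCase())
      .filter((entry) => entry.length > 0),
  );

const registered = parseRegisteredEmails(' Alice@example.com, bob@example.com ,');
console.log(registered.has('alice@example.com'));
```

A set lookup like this also catches the classic mistake of a blank after the comma in the parameter value.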

Grant Access to the Parameter Store

Now that we have created the parameters, we have their ARNs (Amazon Resource Names) and can restrict the access of the function's role to these three parameters.

Go to the AWS IAM Console, Access management | Roles and search for photosLogin. Alternatively, run the test again and look for the exact name of the role in the exception message. Click the role name, then, in the Permissions tab, select Create inline policy from the Add permissions dropdown.

The next page lets you specify permissions.

First, select Systems Manager as the service.

Next, under Actions allowed scroll a little bit down to Read, expand it, and check GetParameter.

Under Resources, make sure that Specific is selected, and click the Add ARNs link.

In the next dialog box select the region you are using, enter photos/* as resource parameter name and click Add ARNs. Then click Next, enter a policy name like "read-photos-email-parameters", and click Create policy.

When you now click the policy name, it should look like this in JSON:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": "ssm:GetParameter",

"Resource": "arn:aws:ssm:us-east-1:ACCOUNT:parameter/photos/*"

}

]

}

Go back to your Lambda function, select the wrongEmail event for testing, and you should now see a redirect to /login-status.html as the result. But still no email has been sent because the address given is not stored in the parameter in AWS Systems Manager.
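If you prefer to name the three parameters explicitly instead of using the photos/* wildcard, the policy document can be generated with a few lines. This is only a sketch; buildParameterPolicy is a hypothetical helper, and region, account id, and prefix are placeholders that you have to replace with your own values.

```javascript
// Build an inline policy that lists each parameter ARN explicitly.
// Region, account id, and prefix are placeholders.
const buildParameterPolicy = ({ region, account, prefix, names }) => ({
  Version: '2012-10-17',
  Statement: [{
    Effect: 'Allow',
    Action: 'ssm:GetParameter',
    Resource: names.map(
      (name) => `arn:aws:ssm:${region}:${account}:parameter/${prefix}/${name}`,
    ),
  }],
});

console.log(JSON.stringify(buildParameterPolicy({
  region: 'us-east-1',
  account: 'ACCOUNT',
  prefix: 'photos',
  names: ['keyPairId', 'privateKey', 'registeredEmails'],
}), null, 2));
```

The output can be pasted into the JSON view of the policy editor.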

Setup Email Sending

But now we want to see an email in our inbox. Create a new test event like this:

{

"Records": [

{

"cf": {

"request": {

"querystring": "email=you@example.com"

}

}

}

]

}

As a smart reader, you have probably already guessed that you have to replace you@example.com with your own email address that you have configured in the last step.

Save the event and run a test with it. Boom! We get the next AccessDeniedException, this time something like this:

"errorMessage": "User: arn:aws:sts::12345:assumed-role/photoLogin-role-7kt5upfa/photoLogin is not authorized to perform `ses:SendEmail' on resource `arn:aws:ses:us-east-1:403172062108:identity/you@example.com'",

Okay, we know what we have to do. We have to allow the Lambda execution role to send emails with AWS Simple Email Service. Follow the steps above to add an inline policy. Select SES v2 as the service (SES v2! Not just SES!), for allowed actions check SendEmail from the actions of access level Write, and under Resources check All, not Specific.

Depending on your use case, you may be able to further restrict the permission. But in general you want to allow the role to send emails to arbitrary addresses. You could also specify a template. But this is outside the scope of this document.

Click Next, give it a meaningful name like send-photos-emails and hit Create policy.

Run the test again, and the next problem will likely pop up in the form of a MessageRejected exception: "Email address is not verified. The following identities failed the check in region US-EAST-1: you@example.com". The reason is that Amazon requires you to verify email addresses before you can use them as sender email addresses.

Validate Email Address

Open the Amazon Simple Email Service aka SES console, and click Configuration | Identities and then Create Identity. Choose Email address as the identity type, enter the address, and hit Create Identity.

Check your inbox for a mail from Amazon Web Services and click the confirmation link. Back at the SES console you should now see that the sender email address is verified.

Try the test for the Lambda function again, and now you should finally find an email in your inbox asking you to click a very long confirmation link. Clicking the link will still not work because it points to /auth and we have not configured that endpoint yet.

If you plan to use a sender address like do-not-reply@example.com, you have to verify the domain instead of that email address because this address is obviously not configured for receiving mails. Consult the SES documentation for details about domain verification.

Move out of the SES Sandbox

When you develop your site, chances are that your sender and recipient email address coincide. So it is enough to just verify this address.

When you start adding more recipient addresses, you may run into the same problem as before and, once more get a MessageRejected exception: "Email address is not verified. The following identities failed the check in region US-EAST-1: my.friend@example.com".

When you start using SES in a region, you are automatically put into a sandbox. You can verify that by logging into the Amazon SES console and navigating to Account Dashboard. If you are in the sandbox, you will see an info box with instructions about how to move out of the sandbox.

One of the restrictions of that sandbox is that you also have to verify recipient addresses, not just sender addresses. This, of course, defeats the purpose of our application. Therefore, click the View Get Set up page button in the info box and follow the instructions. Amazon manually processes the request and you may have to wait up to 24 hours for a reply. For further details, please refer to the relevant part of the Amazon SES documentation.

Until your account has been moved out of the sandbox, you have to use a verified email address both as sender and recipient email.

Associating the Lambda@Edge Function

We now have a Lambda function that creates the signed URL but it is not being triggered. The configuration for that must be done in the behaviour of the CloudFront configuration. You may have already found these settings at the bottom of the behaviour page in the Function associations section.

Viewer vs. Origin Requests/Responses

In that section you will find four hooks: for viewer requests, viewer responses, origin requests, and origin responses. What is meant by that?

Visitors of your site are called viewers, and the requests that they send to an edge server are called viewer requests. The edge server then either has a cached response or forwards the request to the configured origin, either an S3 bucket or another website. This is called the origin request.

The origin response is the response from the origin to the edge server, and the viewer response is the response of the edge to the visitor aka viewer.

You could theoretically associate our login handler with the origin request but it is more efficient to do that with the viewer request because the edge server does not need to contact the origin for handling the request.

Publish a New Version

Every Lambda function has a common ARN (its unique identifier) and one for every published version. They only differ in a trailing colon : followed by an integer version number.
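The difference between the two kinds of ARN is easy to check programmatically. The following sketch assumes the standard aws partition and a numeric version suffix; the names versionedArn and getVersion are made up for this example.

```javascript
// Matches a Lambda function ARN that ends in a numeric version, for example
// arn:aws:lambda:us-east-1:123456789012:function:photosLogin:3
const versionedArn =
  /^arn:aws:lambda:[a-z0-9-]+:\d{12}:function:[A-Za-z0-9_-]+:(\d+)$/;

// Returns the version number, or null for an unqualified ARN or an alias.
const getVersion = (arn) => {
  const match = versionedArn.exec(arn);
  return match ? Number(match[1]) : null;
};

console.log(getVersion('arn:aws:lambda:us-east-1:123456789012:function:photosLogin:3'));
console.log(getVersion('arn:aws:lambda:us-east-1:123456789012:function:photosLogin'));
```

Only ARNs for which getVersion() returns a number are usable for a Lambda@Edge association.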

But you first have to publish a version. Go to the Lambda function, make sure that everything is deployed, and click the Versions tab. Click the Publish new version button, enter an optional description like "initial version", and hit Publish version.

The view that you see now looks pretty much like the one that we are used to but there is a subtle difference. The tabs Versions and Aliases have vanished and in the code source preview you see an info box saying "You can only edit your function code or upload a new .zip or .jar file from the unpublished function page".

In the function overview you should see the ARN of this specific version. Copy it to the clipboard because we need it in the next step. If you want to edit the code from this view, you have to scroll to the top of the page, and in the breadcrumb navigation click the name of your function.

Associate the Lambda Function with the Viewer Requests

Go back to the behaviour configuration for /login and scroll down to Function associations. Choose Lambda@Edge as function type, copy the function ARN into the input at the right, and click Save changes.

Most likely you will see an error message like "The function execution role must be assumable with edgelambda.amazonaws.com as well as lambda.amazonaws.com principals. Update the IAM role and try again. Role: arn:aws:iam::ACCOUNTID:role/service-role/photosLogin-role-123xyz".

Go into the IAM, find the role as described above, and this time change the tab from Permissions to Trust relationships and edit the policy to look like this:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": [

"lambda.amazonaws.com",

"edgelambda.amazonaws.com"

]

},

"Action": "sts:AssumeRole"

}

]

}

AWS automatically created that policy when it created the function execution role. But in order to execute the function as a Lambda@Edge function, you have to add the service principal edgelambda.amazonaws.com, see line 9. Initially, the Service is a string, and you have to turn it into an array. You can also just copy and paste the policy from here.
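The string-to-array change is the part that is easy to get wrong when editing the policy by hand. As an illustration only, here is a sketch of the same transformation in code; addEdgeLambdaPrincipal is a hypothetical helper that works on the parsed JSON of a trust policy.

```javascript
// Return a copy of the trust policy in which every statement with a service
// principal also trusts edgelambda.amazonaws.com.
const addEdgeLambdaPrincipal = (policy) => {
  const edge = 'edgelambda.amazonaws.com';
  const copy = JSON.parse(JSON.stringify(policy)); // leave the input untouched
  for (const statement of copy.Statement) {
    const service = statement.Principal?.Service;
    if (service === undefined) continue;
    // A single principal is a plain string, several principals are an array.
    const services = Array.isArray(service) ? service : [service];
    if (!services.includes(edge)) services.push(edge);
    statement.Principal.Service = services;
  }
  return copy;
};
```

Applying the function twice yields the same result as applying it once, so it is safe to run on a policy that has already been fixed.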

Go back to the behaviour configuration for /login, save the changes, and this time it should succeed. Just in case, if you do not specify a specific version of your function, you will see an error message "The function ARN must reference a specific function version. (The ARN must end with the version number.) ARN: arn:aws:lambda:us-east-1:ACCOUNTID:function:photosLogin". Copy the ARN of a version into the field, and the error should vanish.

Time to try it out. Point your browser to /login.html of your site (the action attribute of the login form should point to /login without the .html), enter a valid email address, and submit the form. You should be redirected to the /login-status.html page and receive a signed URL in your inbox.

The Authentication Lambda Function

We need at least one more endpoint because the signed authentication URL currently points to lala-land.

Code

Go to the AWS Lambda console and create a new function photosAuth or similar. You could save a little bit of trouble by changing the default execution role: select Use an existing role, and pick the default execution role of the login function. Decide for yourself.

Overwrite the stub implementation with this:

import { SSMClient, GetParameterCommand } from '@aws-sdk/client-ssm';

import { getSignedCookies } from '@aws-sdk/cloudfront-signer';

const hostname = 'www.example.com';

const prefix = 'photos';

const region = 'us-east-1';

const cookieTTL = 30 * 24 * 60 * 60 * 1000;

const useSessionCookies = false;

const baseUrl = `https://${hostname}`;

const ssm = new SSMClient({ region });

const cache = {};

export const handler = async (event) => {

cache.keyPairId ??= await getParameter('keyPairId');

cache.privateKey ??= await getParameter('privateKey', true);

const { keyPairId, privateKey } = cache;

const expires = new Date(new Date().getTime() + cookieTTL);

const expiresUTC = expires.toUTCString();

const policy = JSON.stringify({

Statement: [

{

Resource: `${baseUrl}/*`,

Condition: {

DateLessThan: {

'AWS:EpochTime': Math.floor(expires.getTime() / 1000),

},

},

},

],

});

const signedCookie = await getSignedCookies({ keyPairId, privateKey, policy });

const cookies = [];

Object.keys(signedCookie).forEach((key) => {

cookies.push(getCookieHeader(key, signedCookie[key], useSessionCookies ? undefined : expiresUTC));

});

return {

status: '302',

statusDescription: 'Found',

headers: {

location: [{

key: 'Location',

value: `${baseUrl}/`,

}],

'cache-control': [{

key: "Cache-Control",

value: "no-cache, no-store, must-revalidate"

}],

'set-cookie': cookies,

},

};

};

const getParameter = async (relname, WithDecryption = false) => {

const Name = `/${prefix}/${relname}`;

const command = new GetParameterCommand({Name, WithDecryption});

try {

const response = await ssm.send(command);

return response.Parameter.Value;

} catch (error) {

console.error(`Error retrieving parameter '${Name}': `, error);

throw error;

}

};

const getCookieHeader = (key, value, expiresUTC) => {

const fields = [

`${key}=${value}`,

'Path=/',

'Secure',

'HttpOnly',

'SameSite=Lax',

];

if (expiresUTC) fields.push(`Expires=${expiresUTC}`);

return {

key: 'Set-Cookie',

value: fields.join(';'),

}

}

The only things that you should have to modify are lines 4-8.

As the hostname, either choose the CloudFront name like abc123.cloudfront.net or the alternative domain if you have one.

The prefix must be the same as for the login function.

Choose whatever region fits you best.

The cookieTTL is the lifetime of the cookie in milliseconds, in this case 30 days. After that, the cookie will expire. Users could still try to modify the expiry date manually in their browser but it is also part of the signed policy. Although there is no way of preventing a tampered cookie from being sent, CloudFront would simply fail to verify the signature and reject the request.
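The two units involved are easy to mix up: JavaScript dates count milliseconds, whereas the AWS:EpochTime condition in the policy counts seconds. The following sketch repeats the relevant arithmetic in isolation; buildPolicy and its now parameter are my additions for the sake of the example.

```javascript
const cookieTTL = 30 * 24 * 60 * 60 * 1000; // 30 days in milliseconds

// `now` is in milliseconds since the epoch and can be injected for testing.
const buildPolicy = (baseUrl, now = Date.now()) => {
  const expires = new Date(now + cookieTTL);
  return {
    // Goes into the Expires attribute of the cookies.
    expiresUTC: expires.toUTCString(),
    // Goes into the signed policy, in seconds rather than milliseconds.
    policy: JSON.stringify({
      Statement: [{
        Resource: `${baseUrl}/*`,
        Condition: {
          DateLessThan: { 'AWS:EpochTime': Math.floor(expires.getTime() / 1000) },
        },
      }],
    }),
  };
};

console.log(buildPolicy('https://www.example.com', 0).expiresUTC);
// prints "Sat, 31 Jan 1970 00:00:00 GMT", 30 days after the epoch
```

Because the expiry date is part of the signed policy, changing only the Expires attribute in the browser does not extend access.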

Finally, in line 8 you can enable session cookies by setting useSessionCookies to true instead of false. In this case, the signature will still expire after cookieTTL milliseconds but the browser is requested to discard the cookie as soon as the browser window is closed. In other words, the cookie is valid until the expiry date has been reached or until the user closes the browser window, whichever happens first.
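The switch between session cookies and persistent cookies boils down to whether an Expires attribute is sent. A minimal sketch of that decision, reusing the shape of getCookieHeader() from the function above; the wrapper cookieFor is a hypothetical name:

```javascript
const getCookieHeader = (key, value, expiresUTC) => {
  const fields = [`${key}=${value}`, 'Path=/', 'Secure', 'HttpOnly', 'SameSite=Lax'];
  // Without an Expires attribute the browser treats it as a session cookie.
  if (expiresUTC) fields.push(`Expires=${expiresUTC}`);
  return { key: 'Set-Cookie', value: fields.join(';') };
};

// Drop the expiry date when session cookies are requested.
const cookieFor = (key, value, expiresUTC, useSessionCookies) =>
  getCookieHeader(key, value, useSessionCookies ? undefined : expiresUTC);

console.log(cookieFor('CloudFront-Key-Pair-Id', 'K123', 'Sat, 31 Jan 1970 00:00:00 GMT', true).value);
```

Either way, the expiry date inside the signed policy stays in place, so a session cookie never lives longer than a persistent one.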

Configuration of the Authentication Function

Some of the steps that were needed for the login function also have to be done for the authentication function. We just list these tasks here. See The Login Lambda Function for details on how to perform the individual tasks.

They are:

- Deploy the code of the function!

- Publish a version of the function!

- Give the default execution role of the function access to the Systems Manager Parameters!

- Add the service principal edgelambda.amazonaws.com to the trust relationships of the default execution role!

- Associate the function with the viewer requests of the /auth behaviour of the CloudFront distribution.

Now log in again, wait for the mail, click the link and you should be able to surf the site for 30 days.

Test

When you look closely at the code of the authentication function, you notice that it does not even look at the event. You can therefore just create a default event, or one that contains just {} and then hit Test.

You should now see a redirect to the root of your site, and three set-cookie headers CloudFront-Policy, CloudFront-Key-Pair-Id, and CloudFront-Signature.
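If you do not want to check the test output by eye, you can paste the response into a small script. This is just a sketch; missingCookies is a hypothetical helper that expects the response object in the shape that the authentication function returns.

```javascript
const expectedCookies = [
  'CloudFront-Policy',
  'CloudFront-Key-Pair-Id',
  'CloudFront-Signature',
];

// Returns the names of the expected cookies that the response does not set.
const missingCookies = (response) => {
  const present = (response.headers?.['set-cookie'] ?? [])
    .map((header) => header.value.split('=')[0]);
  return expectedCookies.filter((name) => !present.includes(name));
};
```

An empty array means that all three cookies are being set.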

Troubleshooting

It is more than likely that you run into unexpected errors that are not described here. Feel free to leave a comment for that case but it is better to be able to debug the problem yourself.

CloudWatch Logs

In the "IDE" section of the Lambda Functions, there is a tab Monitor that we have not mentioned yet. It provides all kinds of metrics for the function but also a button View CloudWatch Logs that brings you to the AWS monitoring service.

Whenever you run a test execution of the function, you will see a new entry in the us-east-1 region of CloudWatch. But you also see them in the Execution result tab after you run the test.

But what if a triggered execution does not work? You may be lucky and see a log entry for it. But you have to find it. When you are sending a request to your site, you will use an edge server that is close to you, and the events are available in CloudWatch for the region that the edge server belongs to.

But even if you know or guess the right region, you will probably still not see any logs. The reason for that is a little bit hidden in a section Edge Function Logs of the CloudFront developer documentation:

... CloudFront delivers edge function logs on a best-effort basis. The log entry for a particular request might be delivered long after the request was actually processed and, in rare cases, a log entry might not be delivered at all.

Well, in my experience, the rare case is not the exception but the rule, although, maybe I have not looked hard enough for the log entries. Anyway, do not expect a log entry to appear any time soon after the Lambda invocation was triggered.

Create Test Events

A better strategy is to create more test events that match the situation where you have encountered the problem. You will get immediate feedback that may help you to find the issue. One such example could be a typo in email addresses that you add to the list of registered emails.
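Typing these events by hand gets tedious. A tiny generator helps; the sketch below simply reproduces the event shape used earlier in this article, and makeTestEvent is a name that I made up. It inserts the address verbatim, exactly like the hand-written events.

```javascript
// Build a minimal Lambda@Edge viewer request event for the login function.
const makeTestEvent = (email) => ({
  Records: [
    { cf: { request: { querystring: `email=${email}` } } },
  ],
});

// Paste the output into the event editor of the Lambda console.
console.log(JSON.stringify(makeTestEvent('you@example.com'), null, 2));
```

This makes it cheap to create one event per suspicious case, for instance an address with a trailing blank or with different capitalization.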

Use curl

The tool curl is extremely useful for debugging Lambda@Edge functions. It is available for all operating systems, and also for Microsoft "Windows". It also ships with Git Bash for "Windows".

For example, if you want to test the /login endpoint, you could do so like this:

$ curl -v https://www.example.com/login?email=you@example.com

* Trying N.N.N.N:443...

* Connected to www.example.com (N.N.N.N) port 443

* ALPN: curl offers h2,http/1.1

* TLSv1.3 (OUT), TLS handshake, Client hello (1):

* CAfile: /path/to/share/curl/curl-ca-bundle.crt

* CApath: none

* TLSv1.3 (IN), TLS handshake, Server hello (2):

* TLSv1.3 (IN), TLS handshake, Encrypted Extensions (8):

* TLSv1.3 (IN), TLS handshake, Certificate (11):

* TLSv1.3 (IN), TLS handshake, CERT verify (15):

* TLSv1.3 (IN), TLS handshake, Finished (20):

* TLSv1.3 (OUT), TLS change cipher, Change cipher spec (1):

* TLSv1.3 (OUT), TLS handshake, Finished (20):

* SSL connection using TLSv1.3 / TLS_AES_128_GCM_SHA256

* ALPN: server accepted h2

* Server certificate:

* subject: CN=*.example.com

* start date: Nov 22 00:00:00 2023 GMT

* expire date: Dec 21 23:59:59 2024 GMT

* subjectAltName: host "www.example.com" matched cert's "*.example.com"

* issuer: C=US; O=Amazon; CN=Amazon RSA 2048 M03

* SSL certificate verify ok.

* using HTTP/2

* [HTTP/2] [1] OPENED stream for https://www.example.com/login?email=you@example.com

* [HTTP/2] [1] [:method: GET]

* [HTTP/2] [1] [:scheme: https]

* [HTTP/2] [1] [:authority: www.example.com]

* [HTTP/2] [1] [:path: /login?email=you@example.com]

* [HTTP/2] [1] [user-agent: curl/8.4.0]

* [HTTP/2] [1] [accept: */*]

> GET /login?email=you@example.com HTTP/2

> Host: www.example.com

> User-Agent: curl/8.4.0

> Accept: */*

>

* TLSv1.3 (IN), TLS handshake, Newsession Ticket (4):

< HTTP/2 302

< content-length: 0

< location: https://www.example.com/login-status.html

< server: CloudFront

< date: Wed, 07 Feb 2024 16:37:59 GMT

< cache-control: max-age=100

< x-cache: LambdaGeneratedResponse from cloudfront

< via: 1.1 4793c904d4c404e9b797f8328aa848d0.cloudfront.net (CloudFront)

< x-amz-cf-pop: SOG30-C1

< x-amz-cf-id: BCfk5fQb2bayCi00__yXUazQmrZCE18sUQmWetDnEqZujOATa4MaNA==

< x-xss-protection: 1; mode=block

< x-frame-options: SAMEORIGIN

< referrer-policy: strict-origin-when-cross-origin

< x-content-type-options: nosniff

< strict-transport-security: max-age=31536000

<

* Connection #0 to host www.example.com left intact

After the TLS negotiation (the lines starting with *) you see the request that is sent (lines starting with >) and, more interestingly, the response from the server (lines starting with <). The response in this case is a redirect to /login-status.html which is what we expect.

One thing that you should look out for is an age: header in the response. It indicates that the response was served from the cache. This is why we have disabled all caching for now so that this cannot happen. But it will happen later in production.
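If you run such checks often, you can let a script look for the tell-tale headers. The following sketch parses the verbose output of curl and reports whether the response apparently came from the cache. The function names are hypothetical, and besides the age header it also looks at x-cache values starting with "Hit" or "RefreshHit".

```javascript
// Collect the response headers (lines starting with "< ") from curl -v output.
const parseCurlResponseHeaders = (verboseOutput) => {
  const headers = {};
  for (const line of verboseOutput.split('\n')) {
    const match = /^< ([^:\s]+):\s*(.*)$/.exec(line.trim());
    if (match) headers[match[1].toLowerCase()] = match[2];
  }
  return headers;
};

// An age header or a cache hit reported in x-cache indicates a cached response.
const servedFromCache = (headers) =>
  'age' in headers || /^(refresh)?hit from cloudfront/i.test(headers['x-cache'] ?? '');
```

Note that curl writes the verbose lines to standard error, so you have to redirect that stream if you want to feed the output into a script.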

Debugging Output into the Body or Custom Headers

But what if other things do not go right? For example, we do not get an email? Waiting for CloudWatch is probably not an option and therefore, adding debugging output with console.log() statements will not help much.

Especially while you are developing the solution, before you have published the URL, there is a simple alternative. You can simply put the debugging output into the body of the response. For example, look at this return statement from the handler function:

return {

status: '200',

statusDescription: 'Okay',

headers: {

'content-type': [{

key: 'Content-Type',

value: 'text/html'

}]

},

body: 'Email is not valid',

};

Now you can even see the output in the browser.

Spamming the users with debugging output in the response may not be the best solution. For redirect responses, however, they will not actually see that output, while you can still see it with curl. But a better option for production is probably adding custom headers like x-debug-message.
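A redirect that carries such a header could be built like this. It is a sketch under assumptions: debugRedirect is a hypothetical helper, and the header name x-debug-message is just the example from above, not anything that CloudFront treats specially.

```javascript
// Build a redirect response that carries a debugging hint in a custom header.
const debugRedirect = (location, message) => ({
  status: '302',
  statusDescription: 'Found',
  headers: {
    location: [{ key: 'Location', value: location }],
    'x-debug-message': [{
      key: 'X-Debug-Message',
      // Header values must fit on a single line.
      value: String(message).replace(/[\r\n]+/g, ' '),
    }],
  },
});
```

With curl -v the message then shows up among the response headers while browsers silently follow the redirect. Be careful not to leak anything sensitive this way, for example whether an address is registered.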

But even with these tricks, debugging Lambda@Edge functions is no fun because you always have to:

- Change your code.

- Publish a new version.

- Change the function ARN in the function association of the behaviour.

- Wait for change of the behaviour to be deployed to the edges.

Optional: A Logout Function

Our solution currently has one flaw: You cannot log out of the site. This is a problem if you want to use it from a public computer. But implementing the logout feature should now be straightforward.

Logout Function Code

Create a function photosLogout (or whatever you choose) like this:

const loginUrl = 'https://www.example.com/login.html';

export const handler = async (event) => {

const cookies = [];

['CloudFront-Policy', 'CloudFront-Key-Pair-Id', 'CloudFront-Signature']

.forEach((key) => {

cookies.push(getCookieHeader(key));

});

return {

status: '302',

statusDescription: 'Found',

headers: {

location: [{

key: 'Location',

value: loginUrl,

}],

'cache-control': [{

key: "Cache-Control",

value: "no-cache, no-store, must-revalidate"

}],

'set-cookie': cookies,

},

};

};

const getCookieHeader = (key) => {

return {

key: 'Set-Cookie',

value: [

`${key}=`,

'Path=/',

'Expires=Thu, 01 Jan 1970 00:00:00 GMT',

'Secure',

'HttpOnly',

'SameSite=Lax',

].join(';'),

}

}

The code should be pretty self-explanatory by now. We set the three CloudFront cookies CloudFront-Policy, CloudFront-Key-Pair-Id, and CloudFront-Signature to an empty value and set the Expires field to a date in the past. That is the conventional way of "deleting" cookies in the browser.

A safer approach may be to iterate over all cookies and "delete" them and not just the ones that you know about at this point in time.
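That could be sketched as follows, assuming the cookies arrive in the cookie header of the Lambda@Edge request event; expireAllCookies is a hypothetical helper that returns the set-cookie entries for the response.

```javascript
// Create an expiring Set-Cookie header for every cookie sent by the browser.
const expireAllCookies = (request) => {
  const names = new Set();
  for (const header of request.headers?.cookie ?? []) {
    for (const pair of header.value.split(';')) {
      const name = pair.split('=')[0].trim();
      if (name) names.add(name);
    }
  }
  return [...names].map((name) => ({
    key: 'Set-Cookie',
    value: [
      `${name}=`,
      'Path=/',
      'Expires=Thu, 01 Jan 1970 00:00:00 GMT',
      'Secure',
      'HttpOnly',
      'SameSite=Lax',
    ].join(';'),
  }));
};
```

In the handler you would call it with event.Records[0].cf.request. Keep in mind that a cookie is only removed if Path (and Domain, if any) match the values it was originally set with, so this covers cookies set for the path / without an explicit domain.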

Configuration of the Logout Function

When you associate the function with the behaviour /logout, you will run once more into the error that the "function execution role must be assumable with edgelambda.amazonaws.com as well as lambda.amazonaws.com principals". Open the lambda execution role in the IAM, and add edgelambda.amazonaws.com to the Trust relationships and wait a couple of seconds for the change to be propagated to CloudFront before you save the behaviour again.

Wait for the new behaviour configuration to be deployed. Then, when you click on the "Logout" link that we have added to the bottom of our HTML pages (or just visit https://www.example.com/logout), you should be redirected to the login page.

Final Considerations and Clean-Up

Everything is up and running now but you should make some minor changes before you start giving out the URL of your site to users.

Set Up Error Page for 403 Forbidden

If a user wants to visit a page without being authenticated, they should automatically be redirected to the login page. That is easy to achieve.

Click on your distribution in the CloudFront distribution list, click on Error pages and then on Create custom error response. Select the error code 403: Forbidden and set Customize error message to yes.

Set the response page path to /login.html so that users are prompted for logging in instead of being presented an error message. Set the HTTP response code to 403 and save by clicking on Create custom error response.

Wait for the change to be deployed, and all unauthenticated users should now be redirected to the login page.

Set Up Error Page for 404 Not Found

The current setup has a little flaw. If a user enters an invalid URL or you have a broken link, they will also get a 403 and are redirected to the login page although they are authenticated. This sounds a little bit illogical but that is the way that Amazon does it.

But that is why we created the HTML page /404-not-found.html in the beginning. Create a second custom error response for the error code 404, and point it to that page.

The /index.html Problem

When you create a CloudFront distribution, you are asked for a "default root object" and you will usually enter index.html here. The effect is that for a request to https://www.example.com/ the top-level file index.html will be returned.

You will probably expect that for a request to https://www.example.com/about/, the contents of /about/index.html will be returned but unfortunately, this is not the case. For some reason, Amazon allows this only for the root.

You basically have two options. Either change your HTML to always link to the actual object /subdir/index.html instead of /subdir/. I am using the static site generator Qgoda where this is easy to configure: You just change the value of the top-level configuration variable permalink from its default {significant-path} to /{location} and all generated links now point to the full path. You have to check whether your content management system has a similar setting.

The other possibility is to create a new behaviour for the path pattern */ that sends a redirect to the index.html file at this location. You should now know how to do this.
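For reference, such a redirect function could be sketched like this. The names are made up, and unlike the functions above it uses a relative Location header so that no hostname has to be configured. Requests that do not end in a slash are passed through unchanged.

```javascript
// Redirect /subdir/ to /subdir/index.html, pass everything else through.
const redirectToIndex = (request) => {
  if (!request.uri.endsWith('/')) return request;
  const query = request.querystring ? `?${request.querystring}` : '';
  return {
    status: '302',
    statusDescription: 'Found',
    headers: {
      location: [{ key: 'Location', value: `${request.uri}index.html${query}` }],
    },
  };
};

// In the Lambda console this function would be exported as the handler.
const handler = async (event) => redirectToIndex(event.Records[0].cf.request);
```

Associate it with the viewer requests of the */ behaviour, just like the other functions.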

Hopefully, Amazon will lift that restriction one day but for the time being you are stuck with one of these two workarounds.

The problem has actually little to do with authentication but while you are implementing the authentication, you may run into it without noticing: If you have already restricted viewer access to your content but do not have a dedicated 404 error page, then you will get a 403 error even if the viewer sends the signed cookies with the request.

Enable Caching

Remember that we had disabled caching for all behaviours? The idea was to get that out of the way as an error reason during development. Now it is time to change that setting.

Go to the behaviour Default (*) of the CloudFront distribution, and scroll down to Cache key and origin requests. Change it from CachingDisabled to CachingOptimized. You should also review the policy by clicking on View policy.

By default, the minimum TTL of the cache is 1 second, the default TTL is one day, and the maximum TTL is one year. The default cache key settings include neither headers nor cookies nor query strings.

I must admit that I have not fully understood whether this really has an impact on the security of the solution outlined here. The original blog post states that the cache TTL should match the cookie TTL but it does not mention whether that refers to the maximum or the default TTL.

Anyway, this recommendation does not match my observations. To me it seems that the cookie signatures (and the relevant query parameters in the case of signed URLs) are always checked before the decision is taken whether to serve a response from the cache or to send a new request to the origin. But if in doubt, try it out yourself.

You can now enable caching for the other behaviours as well, in the same manner as described above.

Removing Users

What can you do in order to remove a user? With the current setup, there is no way to do that except for changing the encryption key so that the signed cookies become invalid. Otherwise, you just have to wait until they expire.

If you want to implement revoking of permissions, you have to set two more cookies: one containing the email address and a second one containing a signature of the email address, pretty much what Amazon itself is doing when creating signed cookies with the policy. It would be easier if you could simply add the user's email address to the custom policy that is signed for the CloudFront cookies but I am not aware of such a possibility.

You then have to associate another Lambda function with the default behaviour that verifies that signature and checks whether the email address provided is still in the list of registered emails. But that will cost a little bit of performance and also money because the code will be executed on every page view.
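The following is a minimal sketch of how the signing and the check could look. Note that it deliberately swaps the technique: Amazon signs the policy with an RSA key pair, whereas this sketch uses an HMAC with a shared secret, which is sufficient when the same party creates and verifies the signature. The function names, the secret, and the way the registered addresses are passed in as a comma-separated list are assumptions made for this example.

```javascript
const { createHmac, timingSafeEqual } = require('node:crypto');

// Create the value of the (hypothetical) signature cookie for an email
// address. The secret must only be known to your Lambda functions.
function signEmail(email, secret) {
  return createHmac('sha256', secret).update(email).digest('base64url');
}

// Check that the signature cookie really belongs to the email cookie.
function verifyEmail(email, signature, secret) {
  const expected = Buffer.from(signEmail(email, secret));
  const actual = Buffer.from(String(signature));

  // timingSafeEqual() throws for buffers of different lengths, so
  // compare the lengths first.
  return expected.length === actual.length
    && timingSafeEqual(expected, actual);
}

// Check whether the address is still in the comma-separated list of
// registered email addresses.
function isRegistered(email, registeredEmails) {
  return registeredEmails
    .split(',')
    .map((address) => address.trim().toLowerCase())
    .includes(email.toLowerCase());
}

// Grant access only if the signature is valid and the user has not
// been removed in the meantime.
function mayAccess(email, signature, secret, registeredEmails) {
  return verifyEmail(email, signature, secret)
    && isRegistered(email, registeredEmails);
}
```

The login function would call signEmail() when it sets the cookies, and the function associated with the default behaviour would call mayAccess() with the two cookie values on every request.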

Keeping Users Logged In

In the current setup, people can surf the site for n days and will then be kicked out without warning. It can happen that they request a page successfully and a couple of seconds later they are redirected to the login page.

The obvious way to handle that is again a Lambda function associated with the default behaviour that checks whether the user's cookie is about to expire and then issues a new one. The same problems as above exist: It slows things down and costs money.
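The expiry check itself could be sketched as follows, assuming that the cookies were created with a custom policy, so that the expiry date can be read from the CloudFront-Policy cookie. CloudFront expects the base64 encoded policy with the characters +, = and / replaced by -, _ and ~, and the sketch reverses that. The function names and the threshold are made up for this example, and issuing the new cookies is left out because it works like in the login function.

```javascript
// Extract a cookie value from a Lambda@Edge request object, where the
// cookie header is an array of { key, value } objects.
function getCookie(request, name) {
  for (const header of request.headers.cookie || []) {
    for (const pair of header.value.split(';')) {
      const [key, ...rest] = pair.trim().split('=');
      if (key === name) {
        return rest.join('=');
      }
    }
  }

  return undefined;
}

// Undo the character replacements of the URL-safe encoding and parse
// the JSON policy statement.
function decodePolicy(cookieValue) {
  const base64 = cookieValue
    .replace(/-/g, '+')
    .replace(/_/g, '=')
    .replace(/~/g, '/');

  return JSON.parse(Buffer.from(base64, 'base64').toString('utf8'));
}

// Return true if the policy expires in less than thresholdSeconds.
// nowMillis is a parameter only to make the function easy to test.
function expiresSoon(cookieValue, thresholdSeconds, nowMillis = Date.now()) {
  const policy = decodePolicy(cookieValue);
  const expires = policy.Statement[0].Condition.DateLessThan['AWS:EpochTime'];

  return expires - Math.floor(nowMillis / 1000) < thresholdSeconds;
}
```

A handler would read the cookie with getCookie(request, 'CloudFront-Policy') and, if expiresSoon() returns true for a threshold of, say, one day, send a fresh set of signed cookies along with the response.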

If you know that people will always enter your site via the start page /, you can create a behaviour just for / with that functionality. That is not a perfect solution but will work in many cases.

Scaling

Remember that we store the registered email addresses as a comma-separated list which does not really scale and is also not easy to maintain. If your user base grows, you should consider storing the users in a database or maybe distributed over some files in an S3 bucket. You could also integrate Amazon Cognito or similar services.

Conclusion

If you want to restrict access to a private website for a manageable number of users, the solution presented here is secure, efficient and user-friendly, especially because it works without credentials. Adding password-based authentication should be straightforward and can be done in a similar and even simpler way.
